“Right-Sizing” in K8s is WRONG.

“Right-sizing” sounds… well, right - give each service exactly what it needs. In reality, it’s a constant trade-off between waste and risk - and when that balance tips, performance slows, availability falters, and costs rise, often all at once.

We’ve been chasing this “perfect fit” for decades, from bare metal to VMs to containers. And the result? We still overpay, still overengineer, and still get surprised by failures. As systems get more dynamic, those failures happen faster - and cascade harder.

“Right-sizing” is supposed to be the sweet spot: not too much, not too little. But in practice, it’s just guesswork - often based on outdated metrics, gut feeling, or, in the worst case, fear.

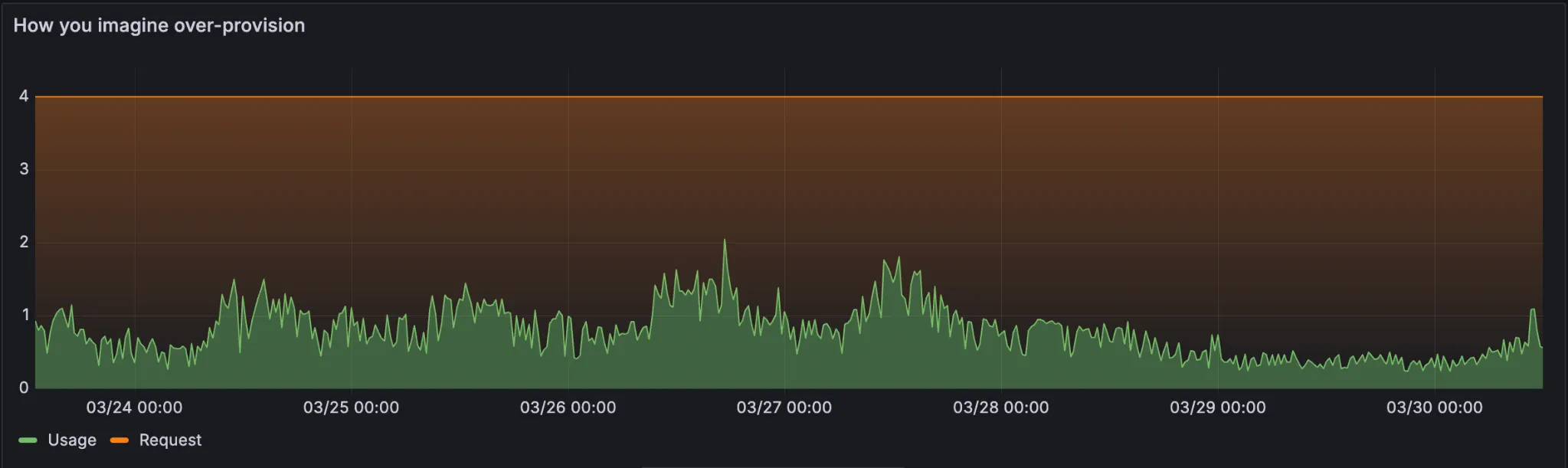

We imagine over-provisioning like this:

The request line sits way above actual usage, and all that extra headroom is just wasted resources. So let’s optimize for peaks instead.

There is still a ton of unused resources.

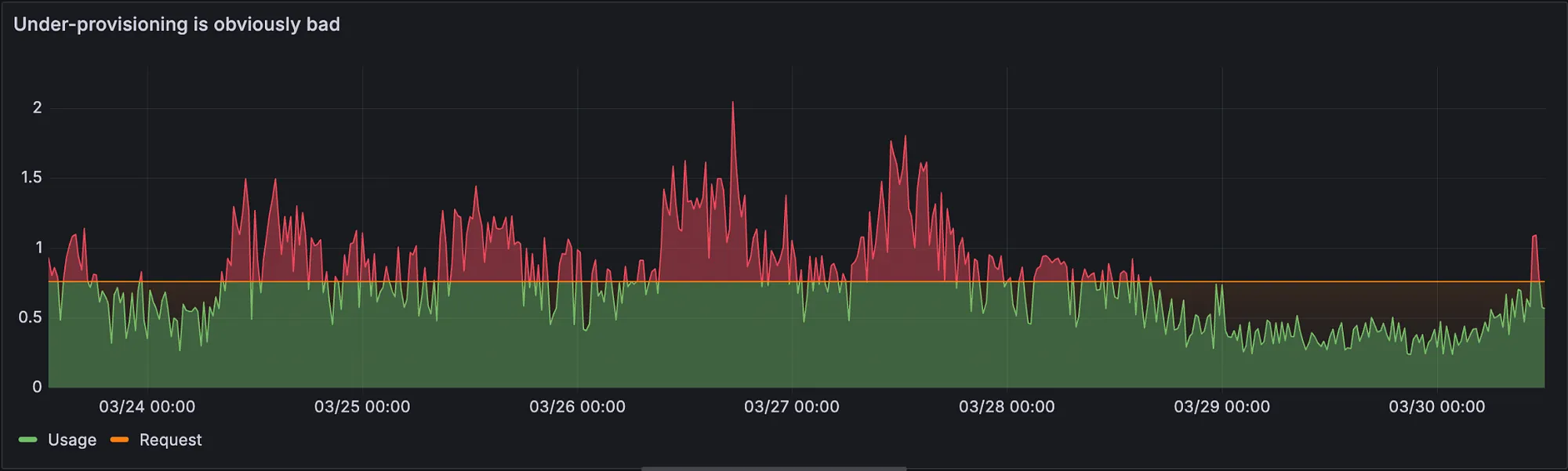

Scale for average utilization?

Now we’re in risky territory - throttling, evictions, noisy neighbors. And this isn’t just theoretical - every slowdown, eviction, or missed CPU cycle can ripple into degraded user experience or even downtime. So let’s get more sophisticated: how about P99?

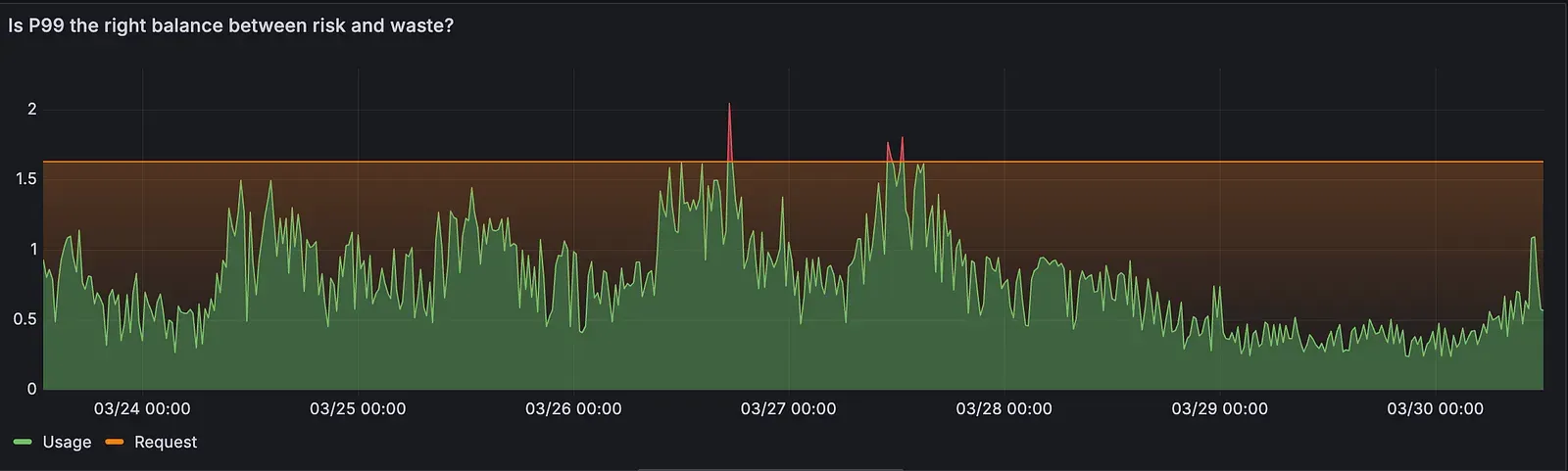

Looking better, right?

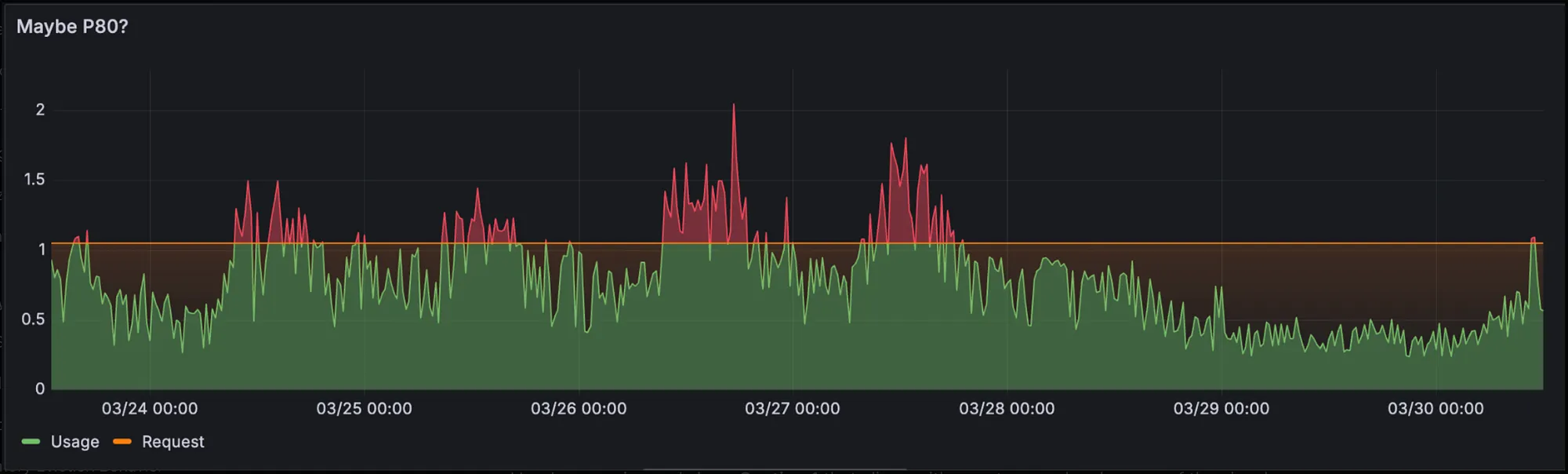

Still imperfect. Some risk and a lot of waste. Maybe P80?

Same story. No matter where you draw the line, there’s no “perfect fit”.
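To make the trade-off concrete, here is a minimal Python sketch that scores a few candidate request lines against a usage trace. Everything in it is an assumption for illustration: the trace is synthetic (a steady baseline with occasional bursts), and `percentile` and `waste_and_risk` are hypothetical helper names, not part of any Kubernetes tooling.

```python
import math
import random

def percentile(samples, p):
    """Nearest-rank percentile: smallest sample with at least p% of samples at or below it."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(p / 100 * len(ordered)))
    return ordered[rank - 1]

def waste_and_risk(usage, request):
    """Return (share of requested capacity left unused, share of samples above the request)."""
    unused = sum(max(request - u, 0.0) for u in usage)
    waste = unused / (request * len(usage))
    risk = sum(1 for u in usage if u > request) / len(usage)
    return waste, risk

# Synthetic CPU usage in cores (made-up data): baseline of 0.2-0.4 cores,
# with roughly 5% of samples carrying an extra burst of 0.5-1.5 cores.
rng = random.Random(42)
usage = [0.2 + rng.random() * 0.2 + (rng.random() < 0.05) * rng.uniform(0.5, 1.5)
         for _ in range(10_000)]

candidates = {
    "peak": max(usage),
    "p99": percentile(usage, 99),
    "p80": percentile(usage, 80),
    "mean": sum(usage) / len(usage),
}
for name, request in candidates.items():
    waste, risk = waste_and_risk(usage, request)
    print(f"{name:>4}: request={request:.2f} cores  waste={waste:.0%}  over-request={risk:.1%}")
```

On a bursty trace like this one, lowering the line trades idle headroom for more time spent above the request. No single row drives both numbers to zero, which is the whole point.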

The problem with right-sizing isn’t the math - it’s the assumption behind it.

It assumes a world where workloads are predictable, behavior is stable, and systems are easy to control. In reality, patterns shift constantly, deployments change behavior, and “perfect” sizing decays in hours or days.

Horizontal scaling doesn’t save you either - each replica can have its own variation, and scaling decisions still depend on those same local guesses.
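Here is a rough sketch of why. The Kubernetes Horizontal Pod Autoscaler documents its core rule as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, and CPU utilization is measured as a percentage of the pod's request. The Python below is a simplified version of that rule only: it ignores tolerance, min/max replica bounds, and stabilization windows, and the function name and numbers are made up for illustration.

```python
import math

def desired_replicas(current_replicas, usage_per_pod_cores, request_cores, target_utilization):
    """Simplified HPA rule: utilization is usage divided by the CPU request,
    and the replica count scales by current utilization over target utilization."""
    current_utilization = usage_per_pod_cores / request_cores
    return math.ceil(current_replicas * current_utilization / target_utilization)

# Identical real load (4 pods using 0.6 cores each), three different request guesses.
for request in (0.5, 1.0, 2.0):
    replicas = desired_replicas(4, 0.6, request, target_utilization=0.7)
    print(f"request={request} cores -> {replicas} replicas")
```

Under these assumptions the same load yields 7, 4, or 2 replicas depending only on the request that was guessed up front. The autoscaler inherits the guess rather than correcting it.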

Right-sizing assumes we know the future.

Spoiler: we don’t.

The cloud promised “only pay for what you need.”

But in reality, we pay for what we think we might need.

Because allocation is still done locally, at the pod or VM level, we either size for safety (and overpay for idle headroom) or gamble with stability and performance.

Local provisioning in the cloud didn’t solve the problem. It just moved it.

We keep adding more reactive layers - autoscalers, reschedulers, vertical tuners, eviction policies - each trying to patch the blind spots of the last.

Instead of solving the trade-off, we’re just:

We’re optimizing in isolation. And isolation doesn’t scale.

Instead of squeezing every pod, node, or replica, zoom out.

Stop treating workloads as islands. Start thinking globally - where resource decisions are made with full context, not just local guesses.
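One way to see what the global view buys you is to compare sizing every workload for its own peak against sizing a shared pool for the peak of the combined load. The Python below is an illustrative sketch with hypothetical traces and a made-up helper name. It is not a description of how any particular platform allocates resources.

```python
def local_vs_pooled(traces):
    """Compare capacity needed when each workload is sized for its own peak
    with capacity needed when one shared pool is sized for the combined peak."""
    local = sum(max(trace) for trace in traces)
    combined = [sum(step) for step in zip(*traces)]  # total load at each time step
    pooled = max(combined)
    return local, pooled

# Hypothetical usage in cores for three services whose bursts do not coincide.
traces = [
    [1, 4, 1, 1, 1, 1],
    [1, 1, 1, 4, 1, 1],
    [1, 1, 1, 1, 1, 4],
]
local, pooled = local_vs_pooled(traces)
print(f"sum of local peaks: {local} cores, peak of pooled load: {pooled} cores")
```

With these traces the local approach reserves 12 cores while the pooled load never exceeds 6. The gap depends entirely on how much the bursts overlap: if every service spikes at the same moment, the two numbers are equal and pooling saves nothing.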

The next step isn’t another scaler. It’s a smarter, interconnected system:

Right-sizing is wrong because the world it assumes doesn’t exist.

Dynamic, global allocation is the only way forward.

This isn’t just a thought experiment.

At 🪄Wand🪄, we’ve built a platform that throws out the “right-sizing” playbook entirely.

Instead of locking each workload into static requests, Wand continuously adjusts at the cluster level to keep applications fast, responsive, and resilient - even under unpredictable load.

We optimize for performance and availability first - so services have the resources they need exactly when they need them. Efficiency and cost savings happen as a byproduct of running your cluster in a healthier, better-balanced state.

No guessing. No firefighting. No endless tuning backlog.

Just a Kubernetes environment that runs lean when it can, and scales instantly when it must.

The result?

If you’re ready to stop right-sizing and start right-running,

📩 Get in touch or drop us a message - and see how much you could save in your own cluster.